Holographic displays are no longer confined to science-fiction movies. Thanks to the latest wave of augmented reality (AR) smartphones, they are becoming a tangible part of daily life. These devices combine sophisticated optical systems with AI algorithms that map and interpret depth in real time, allowing 3D holograms to appear anchored within physical spaces. Because the phones understand spatial context, they can attach virtual objects to real surfaces such as floors and tabletops, making interactions feel intuitive and immersive. This shift is changing how we visualize information, from gaming to design.
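Anchoring a virtual object to a real surface usually starts with a hit test: the app casts a ray from the camera through the point the user tapped and finds where it meets a detected plane such as a floor or tabletop. ARKit and ARCore each provide their own raycasting calls for this. The sketch below is neither of them, only the underlying ray-plane geometry, using a hypothetical `Plane` type and coordinates assumed to be in meters.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


@dataclass
class Plane:
    """Hypothetical detected surface: any point on it plus its normal vector."""
    point: Vec3
    normal: Vec3


def hit_test(origin: Vec3, direction: Vec3, plane: Plane,
             eps: float = 1e-9) -> Optional[Vec3]:
    """Return where a camera ray meets the plane, or None if it never does."""
    denom = dot(plane.normal, direction)
    if abs(denom) < eps:
        return None  # ray runs parallel to the surface
    t = dot(plane.normal, sub(plane.point, origin)) / denom
    if t < 0:
        return None  # surface lies behind the camera
    return (origin[0] + direction[0] * t,
            origin[1] + direction[1] * t,
            origin[2] + direction[2] * t)


floor = Plane(point=(0.0, 0.0, 0.0), normal=(0.0, 1.0, 0.0))
anchor = hit_test((0.0, 1.5, 0.0), (0.0, -1.0, -1.0), floor)
# anchor == (0.0, 0.0, -1.5)
```

In this example, a camera held 1.5 m above the floor and aimed downward at 45 degrees anchors the hologram on the floor 1.5 m in front of the user. Returning `None` for parallel rays and for surfaces behind the camera keeps the app from placing objects where the user cannot see them.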

What sets these AR smartphones apart is their ability to blend digital content with the physical world without additional headsets or glasses. Using advanced sensors and machine learning, they track hand movements and environmental geometry, letting users manipulate holograms as if they were real objects. Microsoft, through its HoloLens mixed-reality research, and a growing number of smartphone manufacturers are pushing the boundaries of what mobile technology can achieve. As these capabilities become more accessible, industries such as education, healthcare, and retail are already exploring practical applications.
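Gesture input is typically built on top of hand tracking that reports fingertip positions every frame. As a minimal sketch, assuming a hypothetical tracker that supplies thumb-tip and index-tip coordinates in meters, a pinch (a common "grab" gesture for holograms) can be detected with two distance thresholds. The 2 cm and 3 cm values are illustrative assumptions, not figures from any particular device.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]


class PinchDetector:
    """Detects a thumb-index pinch from fingertip positions (hypothetical input, in meters)."""

    def __init__(self, press_dist: float = 0.02, release_dist: float = 0.03) -> None:
        # Illustrative thresholds: a pinch starts below press_dist and ends above release_dist.
        if press_dist >= release_dist:
            raise ValueError("press_dist must be smaller than release_dist")
        self.press_dist = press_dist
        self.release_dist = release_dist
        self.pinching = False

    def update(self, thumb_tip: Point3, index_tip: Point3) -> bool:
        """Feed one frame of fingertip positions; return whether a pinch is active."""
        gap = math.dist(thumb_tip, index_tip)
        if self.pinching and gap > self.release_dist:
            self.pinching = False
        elif not self.pinching and gap < self.press_dist:
            self.pinching = True
        return self.pinching


detector = PinchDetector()
frames = [0.05, 0.015, 0.025, 0.035]  # thumb-index gaps in meters, one per frame
states = [detector.update((0.0, 0.0, 0.0), (gap, 0.0, 0.0)) for gap in frames]
# states == [False, True, True, False]
```

Using separate press and release distances (hysteresis) keeps the gesture from flickering on and off when tracking noise hovers around a single threshold; note that the 2.5 cm frame stays pinched.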

AI-driven depth sensing, together with lighting estimation, helps holograms respond accurately to changes in lighting, perspective, and user input. This real-time processing demands significant computational power, which modern mobile chipsets can now deliver efficiently. For developers and creators, this opens up possibilities for interactive experiences that were previously impractical on mobile platforms. Platforms from tech leaders, such as Google’s ARCore, are helping accelerate innovation in this space.

Although it is still in early adoption, holographic AR on smartphones signals a shift toward more natural human-computer interaction. As software ecosystems mature and hardware improves, broader consumer use cases are likely to emerge, from virtual home assistants to immersive storytelling. The foundation has been laid, and with continued advances, holography may soon become as commonplace as touchscreens became.

The Technology Behind Holographic AR

Holographic displays aim to create 3D images that appear to float in space and can be viewed from a wide range of angles, with no glasses required. These systems rely on light-field rendering, which reproduces not only the brightness of light but also the direction of each ray, to simulate how light behaves in the real world and create the illusion of depth and volume. Combined with waveguide optics, which guide light through thin transparent surfaces, and ultra-high-resolution micro-displays, they can produce sharp, lifelike holograms that appear integrated into the physical environment. This is a major leap beyond traditional flat screens, bringing us closer to the kind of interactive visuals once seen only in science fiction. For more on how light-field technology works, see resources from Nature Photonics.

One of the most exciting applications of this technology is in augmented reality (AR) smartphones, where holograms are not only viewed but can also be rotated, resized, and moved with natural hand gestures. These devices are equipped with depth sensors such as time-of-flight (ToF) cameras and LiDAR scanners, paired with neural processing units (NPUs) that interpret spatial data in real time. This allows the phone to map your surroundings and track finger movements precisely enough that interactions with holograms feel intuitive and responsive. Apple and Samsung have already shipped such sensors in some of their devices, for example the LiDAR Scanner on Apple’s Pro-model iPhones and iPads, paving the way for wider adoption of gesture-controlled AR experiences. Learn more about AR depth sensing from Apple’s ARKit documentation.
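The depth readings from these sensors rest on simple physics. A direct ToF sensor times a light pulse's round trip, so distance is d = c·Δt/2. An indirect (continuous-wave) ToF sensor instead measures the phase shift φ of modulated light, giving d = c·φ/(4π·f_mod), which is unambiguous only up to c/(2·f_mod). The sketch below evaluates both formulas; it does not claim which principle any particular phone's sensor uses, and the 20 MHz modulation frequency is an assumed illustrative value.

```python
import math

C = 299_792_458.0  # speed of light in a vacuum, m/s


def direct_tof_distance(round_trip_s: float) -> float:
    """Direct ToF: the pulse travels out and back, so halve the light path."""
    return C * round_trip_s / 2.0


def indirect_tof_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Indirect (continuous-wave) ToF: distance from the phase shift of modulated light."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Beyond this distance the phase wraps past 2*pi, so readings alias."""
    return C / (2.0 * mod_freq_hz)


print(direct_tof_distance(10e-9))            # about 1.499 m for a 10 ns round trip
print(unambiguous_range(20e6))               # about 7.495 m at an assumed 20 MHz
print(indirect_tof_distance(math.pi, 20e6))  # about 3.747 m, half the unambiguous range
```

A 10 ns round trip corresponds to roughly 1.5 m, and resolving a 1 cm depth difference means distinguishing round-trip times only about 67 ps apart, which is why ToF hardware needs very fine timing or phase resolution.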

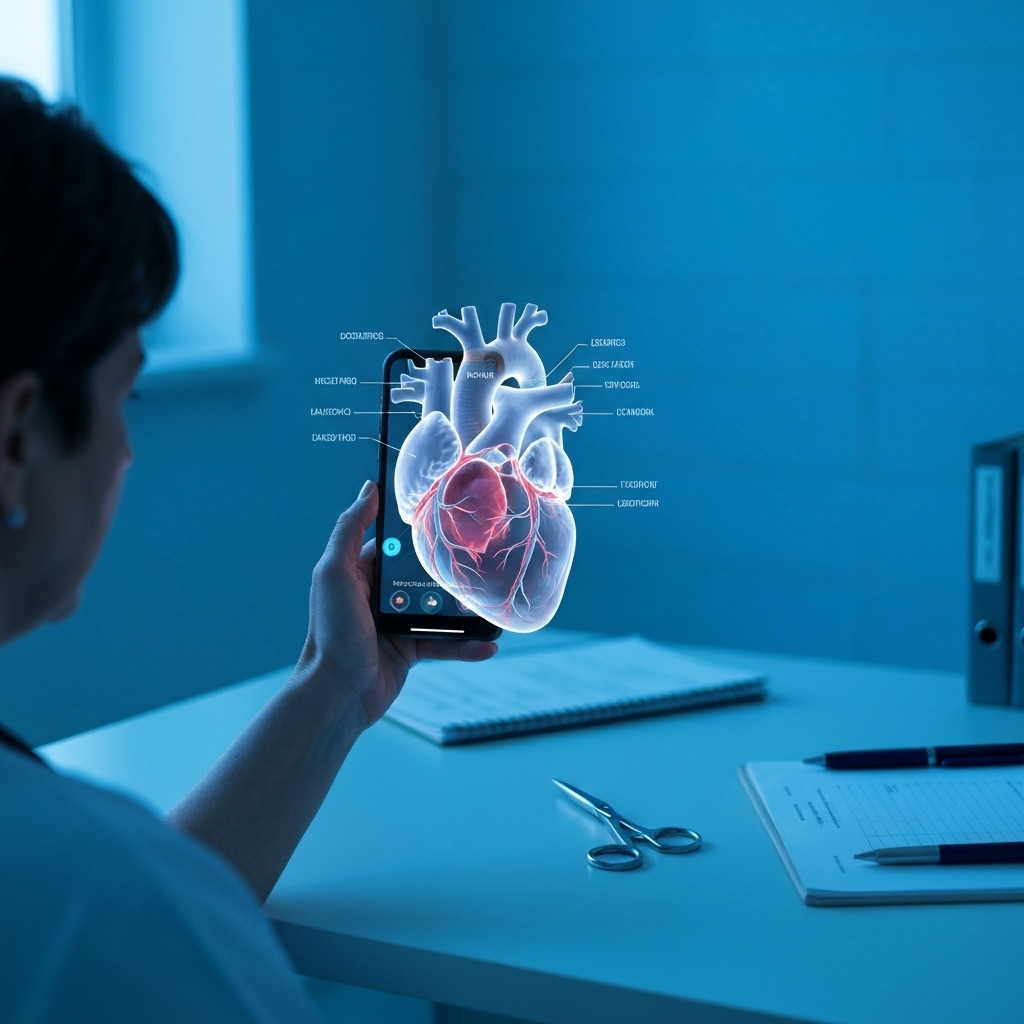

What sets these systems apart is their ability to blend computer-generated imagery with the real world so convincingly that users begin to perceive holograms as tangible objects. Because the display is continuously updated to match the user’s viewpoint and hand position, the illusion of physical presence holds up as the user moves. This opens up possibilities across industries, from medical training, where students can manipulate 3D models of organs, to remote collaboration, where engineers can examine and modify holographic prototypes together in real time. Such applications are often built with game engines like Unity or Unreal Engine, both of which offer tooling for AR and spatial computing.
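Keeping a hologram fixed in place means re-projecting its world-space anchor into screen coordinates every frame as the phone moves. A minimal sketch with a pinhole camera model follows, assuming yaw-only rotation, hypothetical intrinsics `fx`, `fy`, `cx`, `cy` in pixels, and a camera frame with +x right, +y up, and +z forward. Real AR frameworks supply full six-degree-of-freedom poses and their own projection matrices, so this only illustrates the geometry.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def yaw_rotation(yaw_rad: float) -> Mat3:
    """Camera-to-world rotation for a camera turned about the vertical (y) axis."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return ((c, 0.0, s), (0.0, 1.0, 0.0), (-s, 0.0, c))


def project(anchor: Vec3, cam_pos: Vec3, cam_rot: Mat3,
            fx: float, fy: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pixel (u, v) of a world-space anchor, or None if it is behind the camera."""
    # Offset from camera to anchor, in world coordinates.
    d = tuple(a - p for a, p in zip(anchor, cam_pos))
    # World -> camera: apply the transpose of the camera-to-world rotation.
    x, y, z = (sum(cam_rot[r][i] * d[r] for r in range(3)) for i in range(3))
    if z <= 0:
        return None
    # Pinhole model; image rows grow downward, so camera +y (up) maps to smaller v.
    return (fx * x / z + cx, cy - fy * y / z)


# Hypothetical intrinsics for a 1280x720 image.
K = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
hologram = (0.0, 0.0, 2.0)  # fixed anchor 2 m ahead of the starting position
print(project(hologram, (0.0, 0.0, 0.0), yaw_rotation(0.0), **K))  # (640.0, 360.0)
print(project(hologram, (0.5, 0.0, 0.0), yaw_rotation(0.0), **K))  # (390.0, 360.0)
```

Moving the phone 0.5 m to the right shifts the hologram 250 pixels to the left on screen. That parallax, recomputed every frame, is what makes a virtual object appear to stay put in the room.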

As processing power increases and optical components shrink, holographic AR is likely to move beyond smartphones into wearables such as smart glasses. However, challenges remain in balancing image brightness, field of view, and battery life. Despite these hurdles, progress so far suggests that holographic interfaces may soon become a standard part of how we interact with technology. Mixed-reality efforts such as Microsoft’s HoloLens have already pushed the boundaries of what is possible and offer a useful view of where the research is heading.

Applications Across Industries

Holographic augmented reality (AR) is transforming how professionals across industries interact with digital content in real-world environments. In healthcare, surgeons are using AR to project 3D holograms of patient anatomy, supporting more precise preoperative planning and potentially better outcomes. These interactive models let medical teams explore complex structures such as the heart or brain from every angle, enhancing both training and surgical accuracy. Institutions such as the Mayo Clinic have begun integrating AR technologies into their workflows, showcasing the potential of spatial computing in medicine.

Architects and designers also benefit from holographic AR, which lets them offer clients immersive walkthroughs of unbuilt spaces. Instead of relying on blueprints or flat renderings, they can place life-sized holographic models directly into physical environments using devices like Microsoft HoloLens. This improves client understanding and helps identify design issues early in the process. Firms such as Foster + Partners are pioneering these techniques, demonstrating how AR enhances collaboration and visualization in architecture.

In entertainment, holographic AR is redefining how users engage with games and media. Players can interact with characters that appear to inhabit their living rooms, blending digital storytelling with physical surroundings. Headset makers such as Magic Leap, along with developers building apps for AR headsets, are pushing the boundaries of immersive experiences. As hardware becomes more accessible, broader adoption across education, retail, and remote collaboration is likely.

Consumer Adoption and Market Growth

Major tech companies are embedding holographic-style AR capabilities directly into their flagship smartphones, marking a significant leap in consumer technology. These devices combine AR hardware and software to project interactive 3D visuals into real-world environments, making immersive experiences more accessible than ever. Because AR software development kits (SDKs) ship with the phones’ operating systems, developers can build rich holographic applications without external tools or specialized equipment. This lowers the barrier to entry for both creators and users and is fueling rapid innovation across industries.

AR development platforms such as Google’s ARCore and Apple’s ARKit allow app developers to build sophisticated holographic experiences for education, navigation, and remote collaboration. Students can explore 3D models of human anatomy overlaid on their desks, while professionals use spatial interfaces for virtual meetings with lifelike avatars. Even social media apps are evolving, letting users share holographic messages and interactive filters that respond to movement and depth.

As consumer adoption accelerates, we’re seeing a growing ecosystem of apps that turn everyday smartphones into portals for spatial computing. Retailers, for instance, use holograms to let customers visualize furniture in their homes before purchasing. Meanwhile, city planners and architects employ these tools for real-time 3D modeling during site visits. The convergence of powerful mobile processors, improved sensors, and cloud-based rendering is making these experiences smoother and more reliable.

This trend is not just about novelty; it is reshaping how people interact with digital information. As holographic features become standard in high-end devices, users increasingly expect intuitive, visually engaging interfaces. According to industry analyses from sources such as Statista, the AR market is projected to grow significantly over the next five years, driven largely by smartphone-based solutions. With continued investment and developer support, holographic smartphone technology could soon become an essential part of daily digital life.

Challenges and Future Prospects

While holographic technology has made impressive strides, several technical challenges still stand in the way of widespread adoption. One of the most pressing is battery consumption: rendering high-resolution 3D images in real time demands significant power, which can quickly drain mobile and wearable devices. Heat management is another obstacle, since the heavy computational load generates heat that can force the processor to throttle its performance and make the device uncomfortable to hold. These limitations highlight the need for more efficient hardware before holography can move smoothly into everyday use.

Another critical barrier is the lack of content creation tools optimized for holographic displays. Most current 3D modeling software was not designed with real-time holography in mind, which makes it difficult for developers and designers to produce immersive experiences efficiently. As a result, there is growing demand for accessible platforms that simplify the workflow from concept to projection. Companies like Microsoft have begun addressing this with mixed reality development kits, but broader industry standards are still evolving.

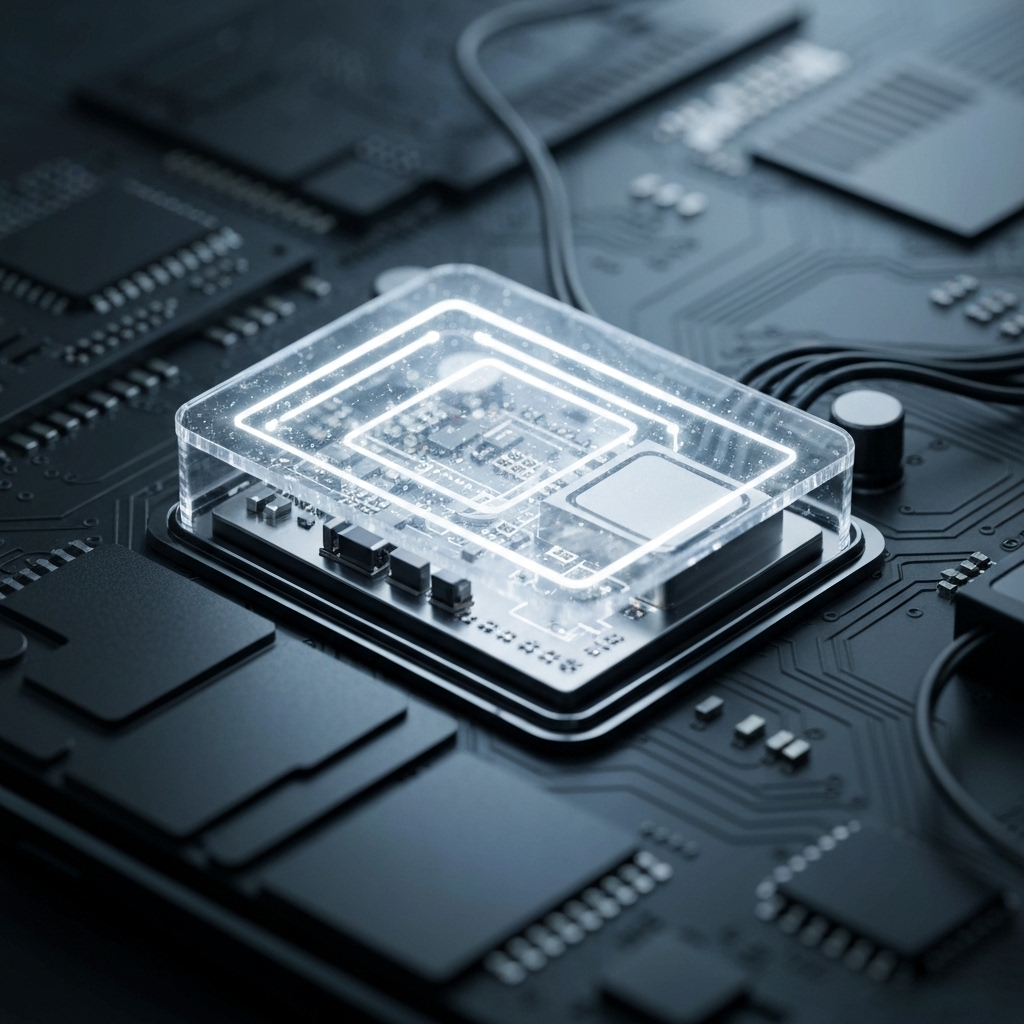

Despite these hurdles, breakthroughs in photonic chips are paving the way forward. Unlike traditional electronic processors, photonic chips use light rather than electrical signals to move and process data, which promises higher speeds at lower energy consumption, a good fit for holographic workloads. Combined with AI-driven compression algorithms that reduce the massive data requirements of 3D imagery, these advances are making real-time holography more practical. According to research published by institutions such as MIT, these technologies could cut latency and power usage by up to 70%, accelerating real-world deployment.

Some experts now predict that standalone holographic displays, devices that project 3D images without external sensors or headsets, could become mainstream within five years. The forecast is backed by ongoing investment from tech giants and startups alike, all racing to bring seamless, glasses-free holography to consumer markets. As these technologies mature, they promise to transform industries ranging from telemedicine to entertainment, making experiences once confined to science fiction part of daily life.

Conclusion: A New Dimension in Mobile Experience

Imagine pointing your smartphone at a city street and instantly seeing restaurant reviews, historical facts, or navigation cues layered over the real world. This is the promise of augmented reality (AR) smartphones. Unlike traditional screens that confine content to a flat plane, AR turns your device into a window onto an interactive 3D environment. Companies like Apple and Google are already integrating advanced AR capabilities into their mobile operating systems, making spatial computing more accessible than ever.

Holographic displays take this a step further by projecting 3D images into physical space without the need for special glasses. Although these displays are still emerging, startups and research labs are developing them for consumer applications, from virtual home assistants that appear on your nightstand to interactive 3D video calls. The shift from screen-based interaction to immersive, spatial experiences marks a fundamental change in human-computer interaction.

What makes AR smartphones particularly powerful is their ability to blend digital content with real-time environmental awareness. Using sensors, cameras, and AI, these devices understand depth, lighting, and movement, allowing virtual objects to behave realistically within physical spaces. This isn’t just about gaming or entertainment; industries like education, retail, and healthcare are beginning to leverage AR for training simulations, virtual try-ons, and even surgical planning.

As these technologies mature, the line between digital and physical will continue to blur. The future of mobile interaction is not confined to tapping and swiping; it is about reaching out and engaging with information as if it were part of the world around us. With continued innovation, AR smartphones and holographic displays could soon become as essential as the touchscreen became more than a decade ago.